Originally posted on Xebia's blog.

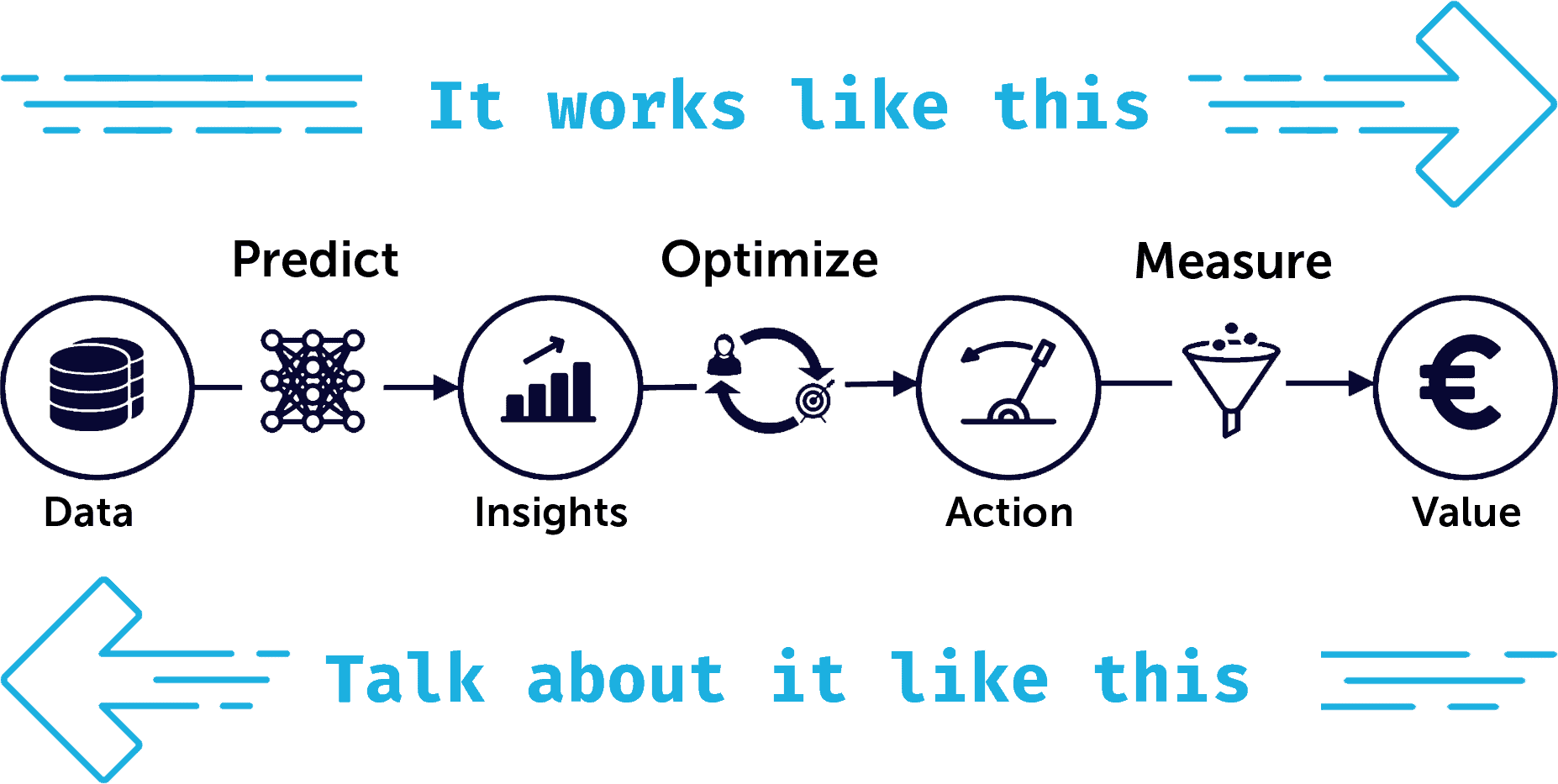

We see many data scientists struggle to explain the use case they're working on.

And that's such a shame!

In this short post we explain the 4-step framework to connect with your audience.

Originally posted on Twitter.

Titanic is tiring 🚢

Iris is irritating 🥀

MNIST is too easy 🥱

Boston makes me queasy 🤢

California housing is not so bad 🏡

Sentiment analysis just makes me sad 🥲

Here are the datasets that I gravitate to... 🧵

What about you? 🙌

Originally posted on Twitter.

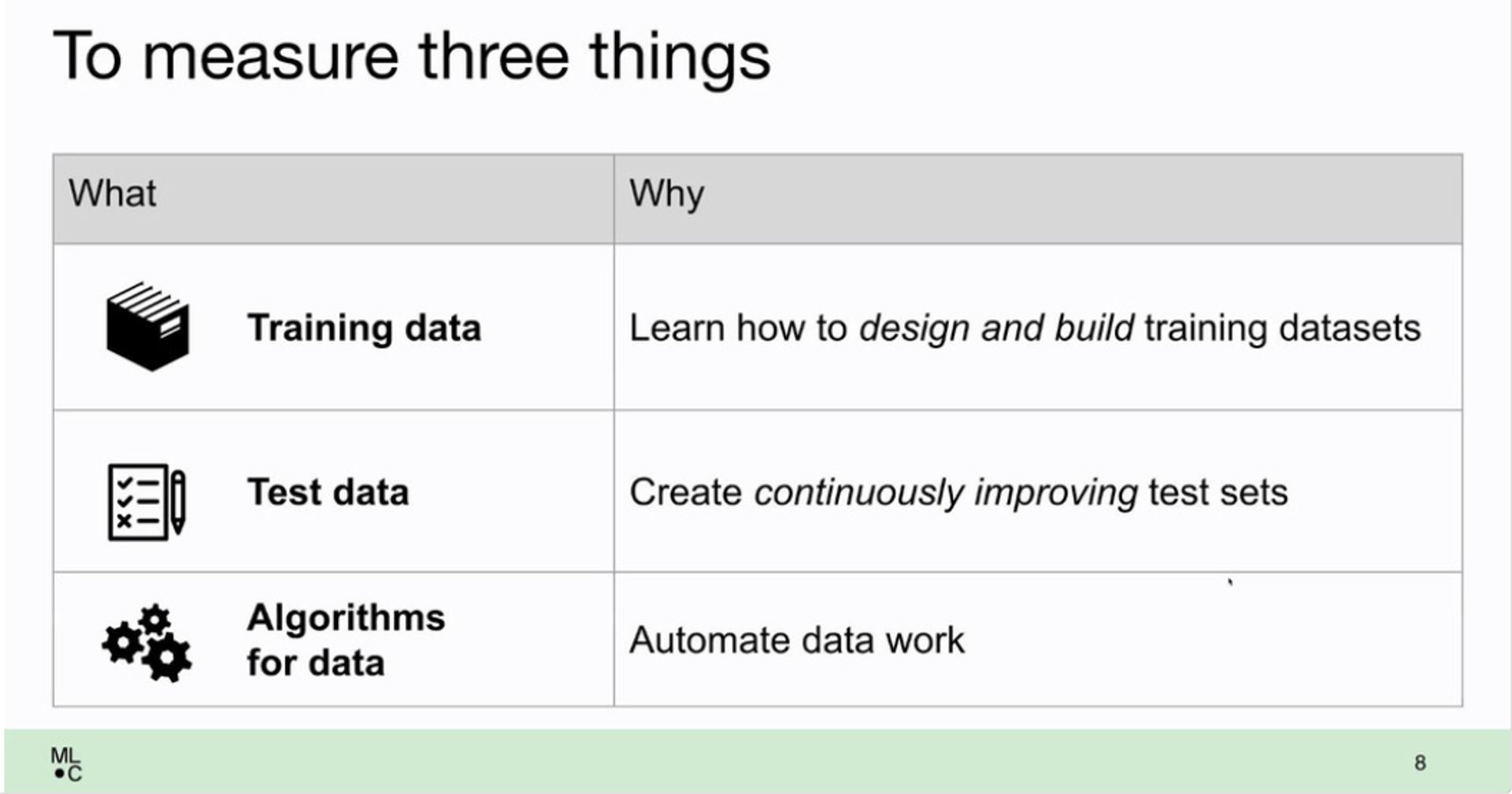

How do you iterate on the data to improve your models?

At NeurIPS I saw a talk by Peter Mattson and Praveen Paritosh about DataPerf.

They share a framework of three types of tasks and how to benchmark them:

- Training data

- Test data

- Algorithms to automate data work

Originally posted on Twitter.

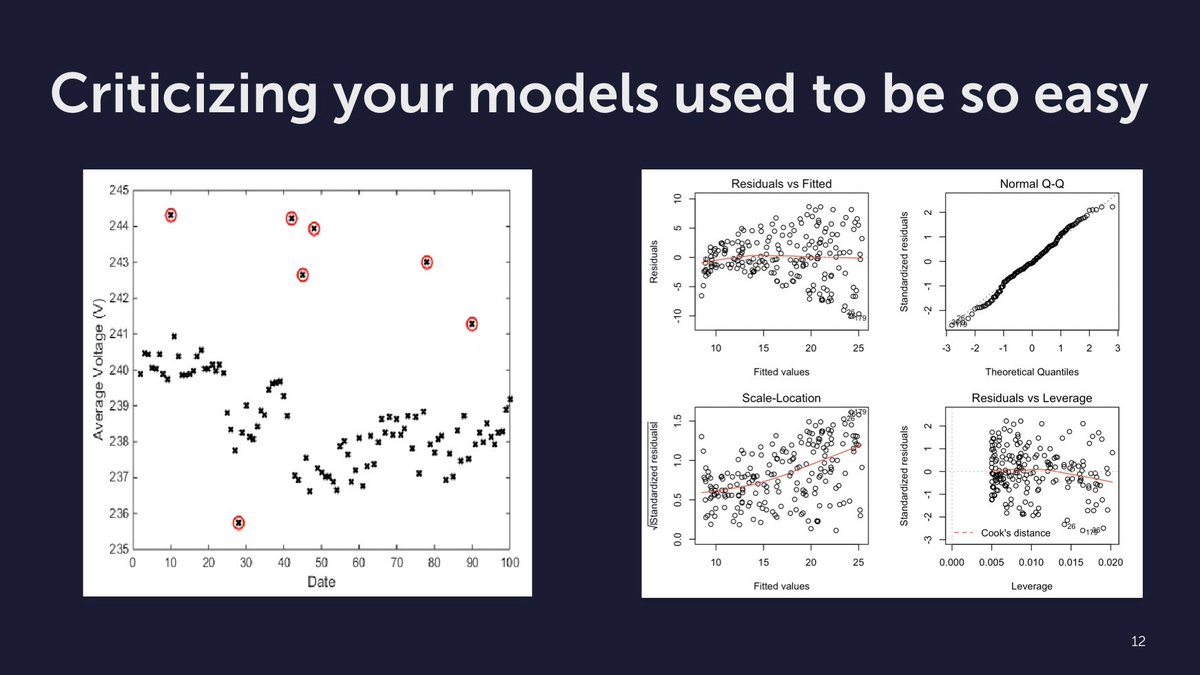

Criticizing your models is an important part of modeling.

In statistics this is well recognized.

We check things like heteroskedasticity to avoid drawing the wrong conclusions.

What do you do in machine learning?

Only check cross-validation score?
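As a rough illustration of what such a check can look like, here is a minimal sketch that fits a line to synthetic data and compares the residual variance in the lower and upper halves of the feature range. It is a crude variance-ratio check in the spirit of the Goldfeld-Quandt test, not a formal statistical test; the helper names (`fit_line`, `residual_variance_ratio`) and the synthetic data are assumptions made up for this example.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residual_variance_ratio(xs, ys):
    """Residual variance in the upper half of x divided by the lower half.

    A ratio far from 1 hints that the noise level depends on x,
    i.e. that the residuals are heteroskedastic.
    """
    slope, intercept = fit_line(xs, ys)
    pairs = sorted(zip(xs, ys))  # order observations by feature value
    residuals = [y - (slope * x + intercept) for x, y in pairs]
    half = len(residuals) // 2
    low, high = residuals[:half], residuals[half:]
    return statistics.pvariance(high) / statistics.pvariance(low)


# Hypothetical synthetic data: same trend, different noise behaviour.
rng = random.Random(0)
xs = [rng.uniform(1, 10) for _ in range(500)]
constant_noise = [2 * x + rng.gauss(0, 1) for x in xs]
growing_noise = [2 * x + rng.gauss(0, 0.5 * x) for x in xs]

ratio_constant = residual_variance_ratio(xs, constant_noise)
ratio_growing = residual_variance_ratio(xs, growing_noise)
print(f"constant noise: {ratio_constant:.2f}, growing noise: {ratio_growing:.2f}")
```

The same idea carries over to machine learning models: slice the residuals (or errors) by a feature or by the prediction itself and look for slices where the model behaves differently, rather than relying on a single aggregate score.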

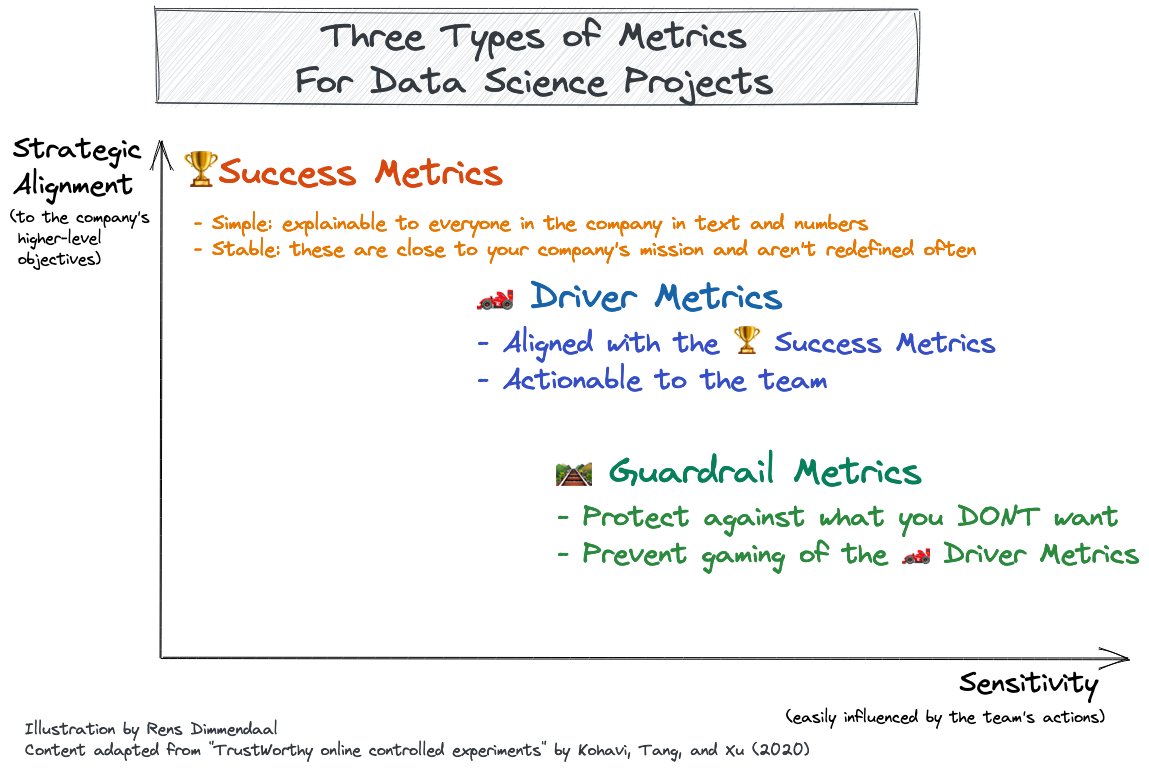

If you're working on a data science project in a professional organization, you'll need to show the value you contribute. That's where metrics come in.

But choosing a metric is hard because there are usually multiple factors at play. I've encountered that in my daily work as a data scientist.

I've found it useful to organize metrics in a three-level framework. I learned about it in the book "Trustworthy Online Controlled Experiments" (Kohavi, Tang, and Xu, 2020).

I'll explain the three types of metrics below.

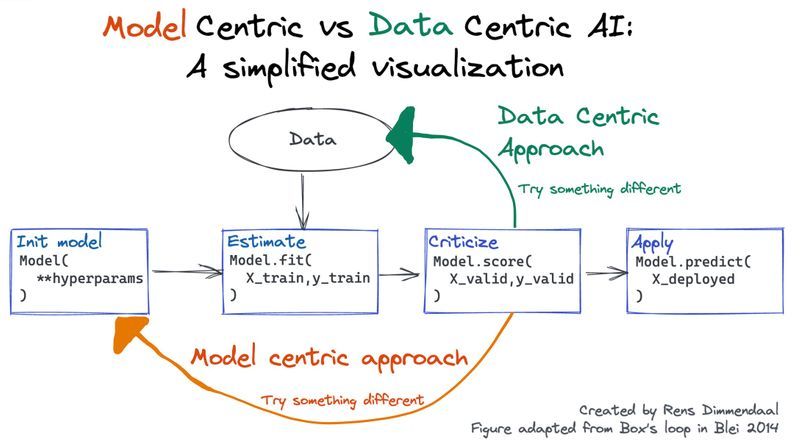

If you care about delivering value with data science, you should probably care about Data-Centric AI.

Data-Centric AI is about iterating on the data instead of the model architecture to create good machine learning models.

Read the full thread on Twitter where I explain why this approach matters for practical applications.

Proud to announce that my team was one of the winners in Andrew Ng's Data-Centric AI Competition! This competition focused on improving model performance by enhancing the dataset rather than changing the model itself.

To learn more about our approach and the data-centric techniques we used, check out our detailed blog post where we share the three key tips that helped us succeed.

We also shared a blog post on DeepLearning.AI about our experience with the competition.

This blog was originally posted at Xebia.com, my employer at the time of writing.

Andrew Ng (co-founder of Coursera, Google Brain, deeplearning.ai, landing.ai) is most famous for his Machine Learning course on Coursera. It teaches the basics of machine learning, how to create models and how to use them to predict with great accuracy.

Recently, he introduced the concept of Data-Centric AI. The idea is that, rather than treating your dataset as fixed and focusing solely on improving your model setup, you focus on improving your dataset. He argues that this is often a much more effective way to improve performance.

This blog was originally posted at Xebia.com, my employer at the time of writing.

Have you ever had a conversation with a chatbot? Was it a positive experience? It might have been. But more likely than not it left you a bit frustrated. Chatbots try to understand your message and help you with an appropriate response. However, most of the time they're not that great yet. In a way chatbots are like baseball players.

"Baseball is the only field of endeavor where a man can succeed three times out of ten and be considered a good performer." — Ted Williams, baseball Hall of Famer

The same holds true for chatbots. A deflection percentage of 32% (users helped by the bot without human intervention) is what Google considers a success story!

Source: Customer Story on Google Dialogflow's website. Retrieved 20 April 2021.

As a data science consultant I've worked with multiple companies on chatbots and helped them do better. During these projects I have discovered a pattern that might help others build better chatbots too. In this article I outline three tips that should help you focus on what matters.

This blog was originally posted at Xebia.com, my employer at the time of writing.

Machines may take over the world within the year;

But creating rhymes instills in us the most fear!

Luckily, pre-trained neural networks are easy to apply.

With great pride we introduce our new assistant: Rhyme with AI.